I'm currently a postdoctoral scholar in the Stanford Translational AI (STAI) lab led by Professor Ehsan Adeli. I earned my PhD in computer science at UNC Chapel Hill under the advisement of Professor Henry Fuchs. My research interests are at the intersection of health AI, computer vision, and machine learning. Currently, I'm working toward a future where next-generation healthcare systems improve the entire patient journey, from advanced diagnostic imaging and surgical support to all-day health monitoring and management, to achieve better therapeutic outcomes for cancer and aging-related diseases. I'm generally interested in opportunities that would allow me to continue to deepen my research expertise while leading and working on projects with meaningful, positive real-world impact, especially with respect to areas such as healthcare and environmental sustainability.

Previously, I was a visiting researcher at IDSIA USI-SUPSI working with Professor Piotr Didyk on the interpretability of multimodal language models (MLMs) with respect to capabilities such as visual perception. I've published in leading venues on topics such as remote health sensing (WACV, NeurIPS), 3D reconstruction (ECCV, MICCAI), LLM-based conversational agents for personal health (EMNLP, Nature Communications), and energy-efficient operation of smart glasses (ISMAR). I've previously done internships at Google AR/VR, Google Consumer Health Research, and Kitware.

Research

Please see my Google Scholar for a full, more up-to-date list of my publications.

The Anatomy of a Personal Health Agent

arXiv 2025

To provide personalized health guidance in daily life, we developed the Personal Health Agent (PHA), a multi-agent framework that reasons over data from consumer wearables and personal health records. Grounded in a user-centered analysis of real-world needs, PHA integrates three specialist agents—a data scientist, a health expert, and a health coach—to deliver tailored insights and guidance. An extensive evaluation across 10 benchmark tasks, involving over 7,000 expert and user annotations, validates the framework and establishes a strong foundation for future personal health applications.

"What's Up, Doc?": Analyzing How Users Seek Health Information in Large-Scale Conversational AI Datasets

EMNLP 2025 (Findings)

To better understand how people use LLMs when seeking health information, we created HealthChat-11K, a dataset of 11,000 real-world conversations. Subsequent annotation and analysis of user interactions across 21 health specialties reveal common interaction patterns and enable informative case studies on incomplete context, affective behaviors, and leading questions. Our findings highlight significant risks when seeking health information with LLMs and underscore the need to improve how conversational AI supports healthcare inquiries.

RADAR: Benchmarking Language Models on Imperfect Tabular Data

NeurIPS 2025

To address language models' poor handling of data artifacts, the RADAR benchmark was created to evaluate data awareness on tabular data. Using 2,980 table-query pairs grounded in real-world data spanning 9 domains and 5 data artifact types, RADAR finds that model performance drops significantly when artifacts like missing values are introduced. This reveals a critical gap in language models' ability to perform robust, real-world data analysis.

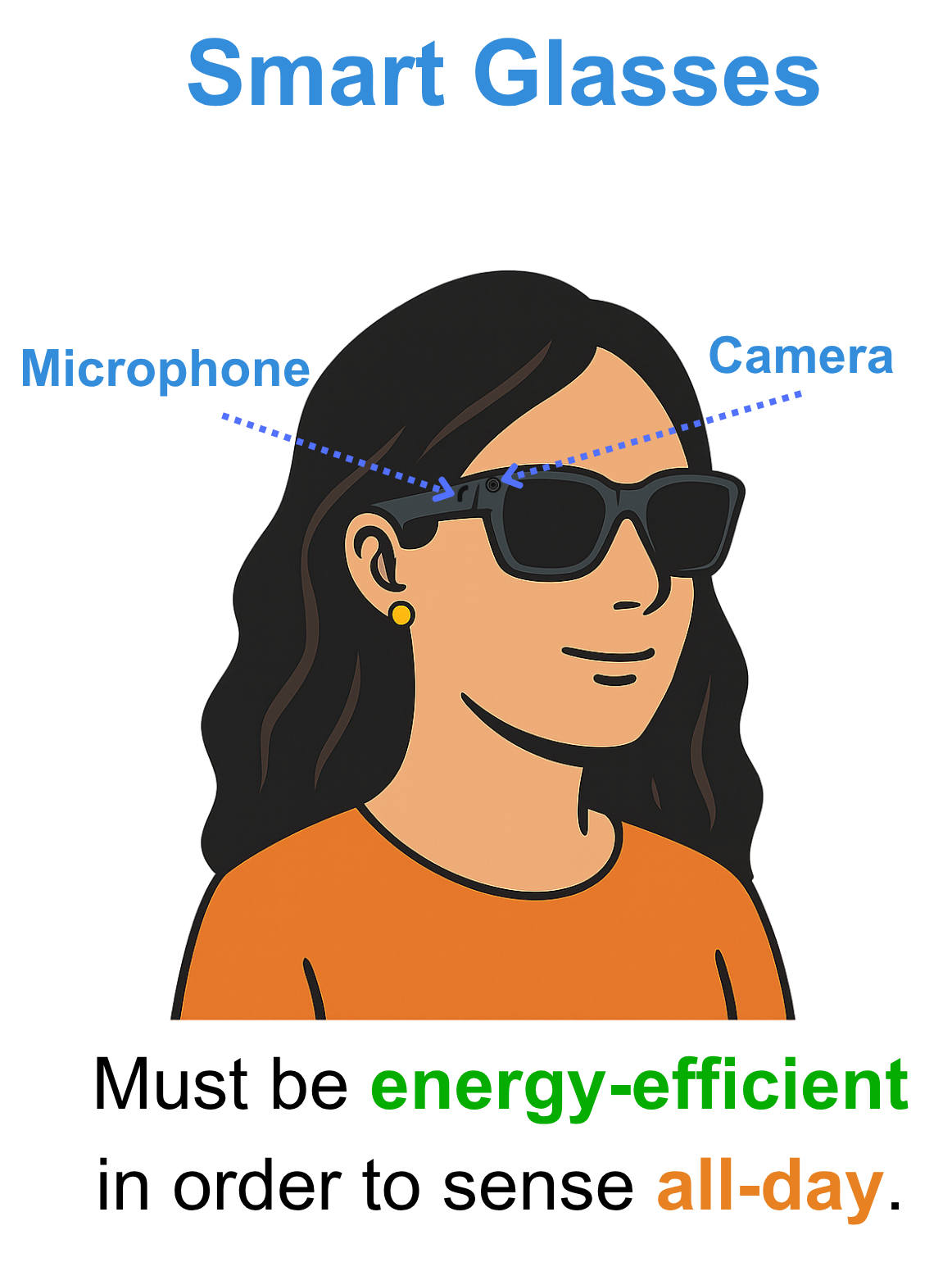

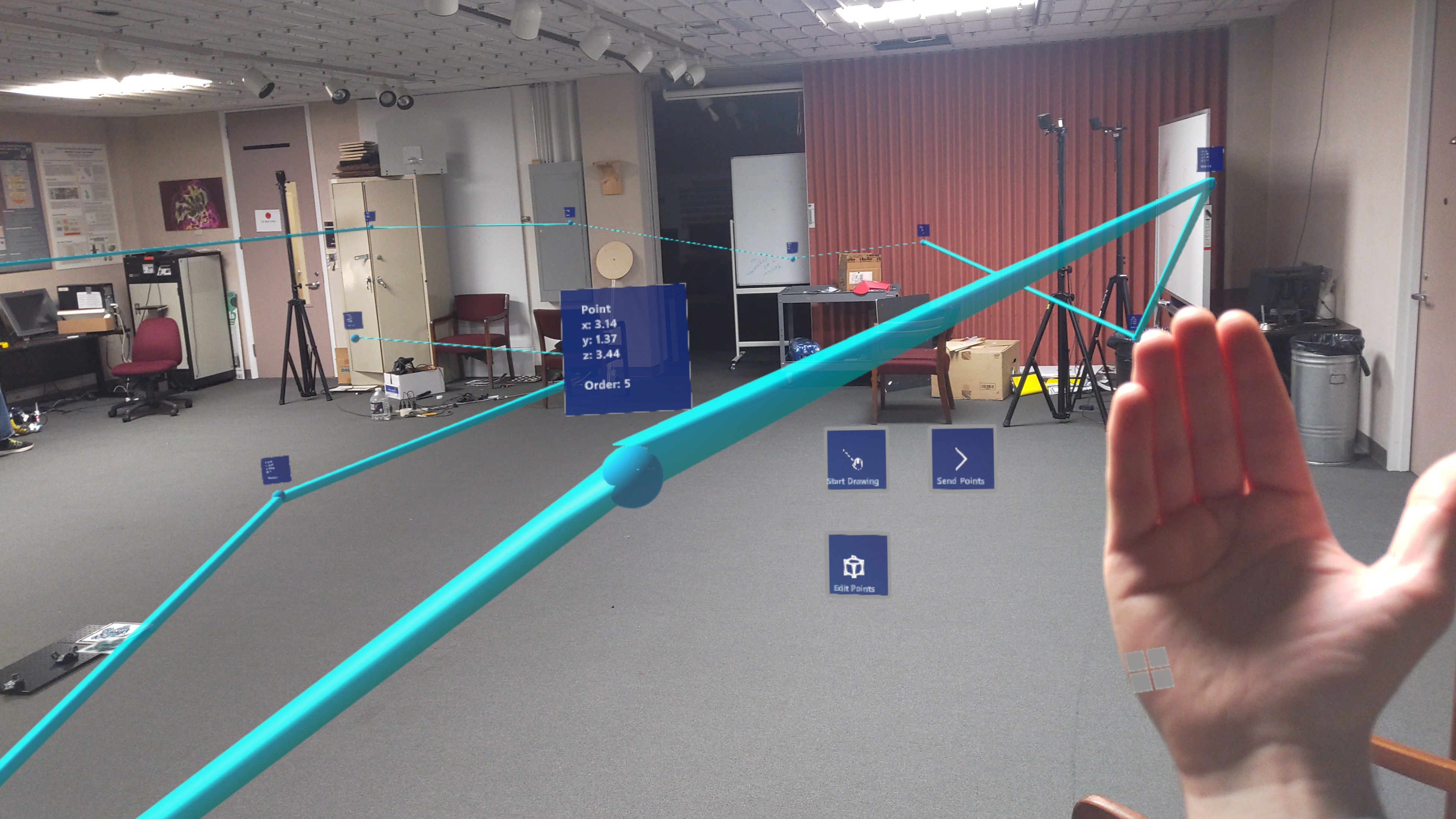

EgoTrigger: Toward Audio-Driven Image Capture for Human Memory Enhancement in All-Day Energy-Efficient Smart Glasses

ISMAR 2025 (TVCG Journal Paper)

EgoTrigger uses a lightweight audio classification model and a custom classification head to trigger image capture from hand-object interaction (HOI) audio cues, such as the sound of a medication bottle being opened. Our approach uses 54% fewer frames on average while maintaining or exceeding performance on an episodic memory task, indicating potential for energy-efficient use in all-day smart glasses capable of human memory enhancement.

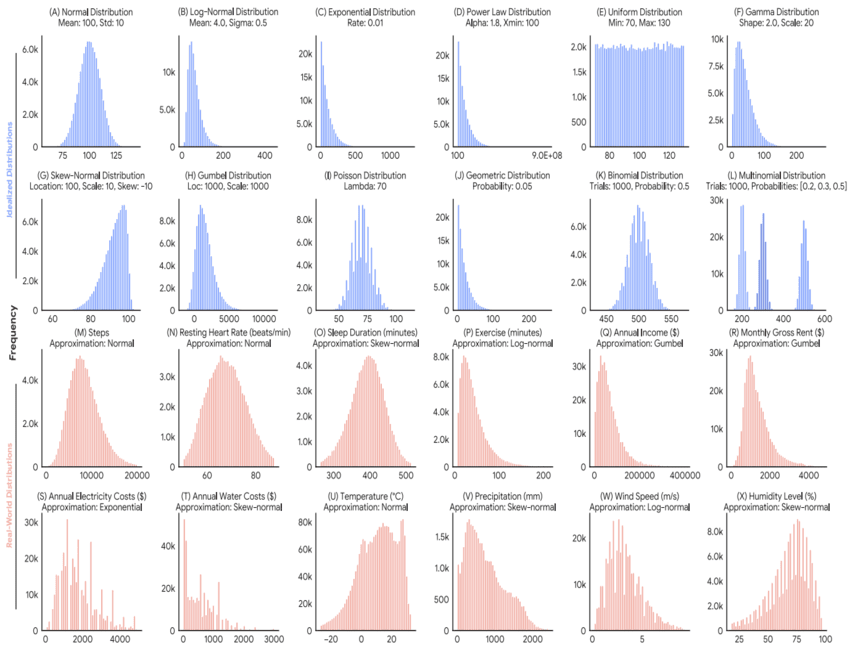

What Are the Odds? Language Models Are Capable of Probabilistic Reasoning

EMNLP 2024 (Main)

Language models were evaluated on probabilistic reasoning tasks such as estimating percentiles and calculating probabilities using idealized and real-world distributions. Techniques including within-distribution anchoring and simplifying assumptions significantly improved LLM performance by up to 70%.

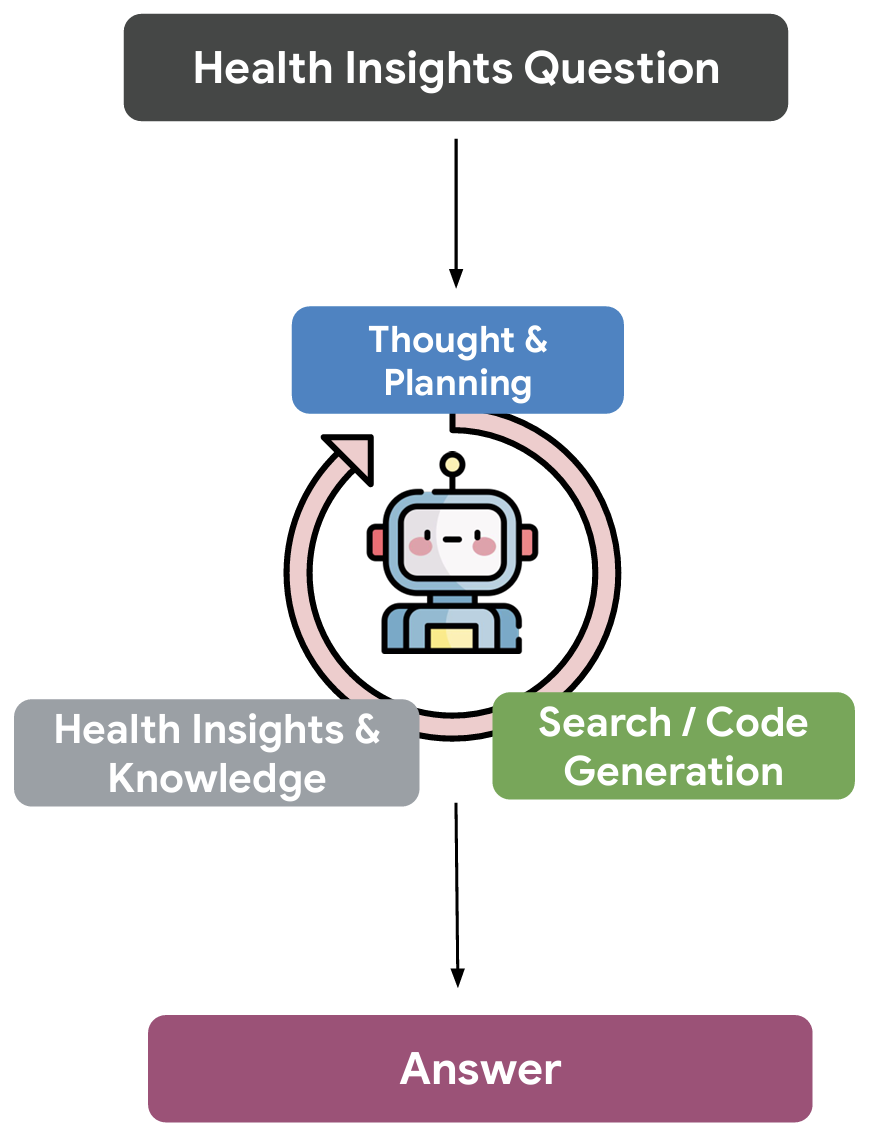

Transforming Wearable Data into Health Insights using Large Language Model Agents

Nature Communications 2025 (Conditionally Accepted)

Deriving personalized insights from wearable health data remains an ongoing challenge. The Personal Health Insights Agent (PHIA) addresses it by combining large language models with code generation and information retrieval tools. Evaluated on over 4,000 questions, PHIA accurately answers over 84% of factual numerical and 83% of open-ended health questions, paving the way for accessible, data-driven personalized wellness.

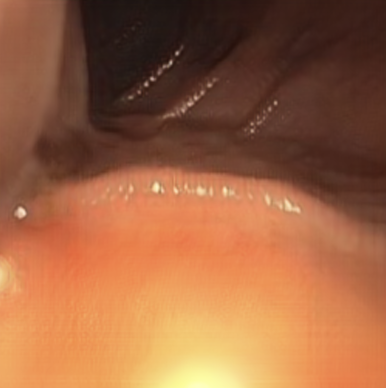

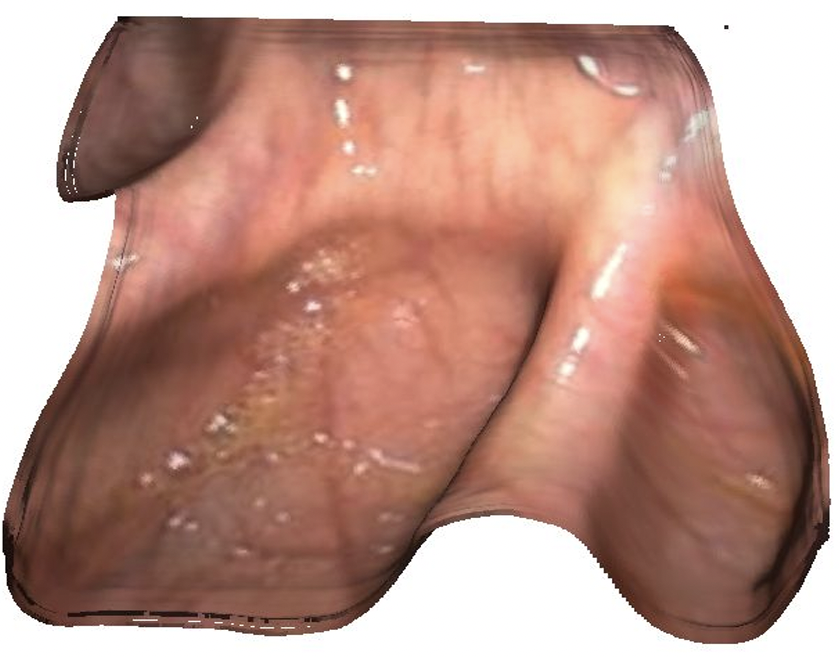

Structure-preserving Image Translation for Depth Estimation in Colonoscopy Video

MICCAI 2024 (Oral)

To address the domain gap in colonoscopy depth estimation, a structure-preserving synthetic-to-real image translation pipeline generates realistic synthetic images that retain depth geometry. This approach, aided by a new clinical dataset, improves supervised depth estimation and generalization to real-world clinical data.

Leveraging Near-Field Lighting for Monocular Depth Estimation from Endoscopy Videos

ECCV 2024

Near-field lighting in endoscopes is modeled as Per-Pixel Shading (PPS) to achieve state-of-the-art depth refinement on colonoscopy data. This is accomplished using PPS features with teacher-student transfer learning and PPS-informed self-supervision.

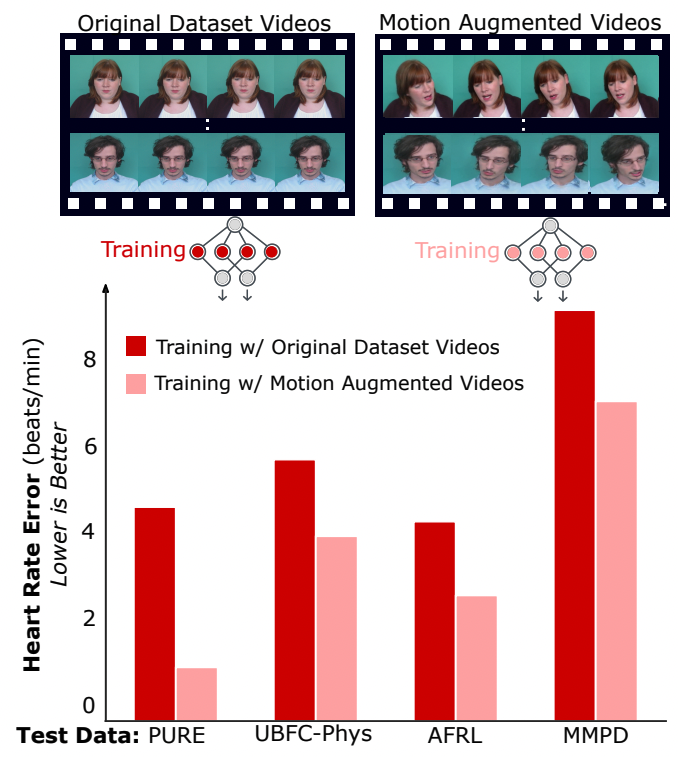

Motion Matters: Neural Motion Transfer for Better Camera Physiological Measurement

WACV 2024 (Oral)

Neural Motion Transfer is presented as an effective data augmentation technique for estimating PPG signals from facial videos. This approach significantly improved inter-dataset testing results by up to 79% and outperformed existing state-of-the-art methods on the PURE dataset by 47%.

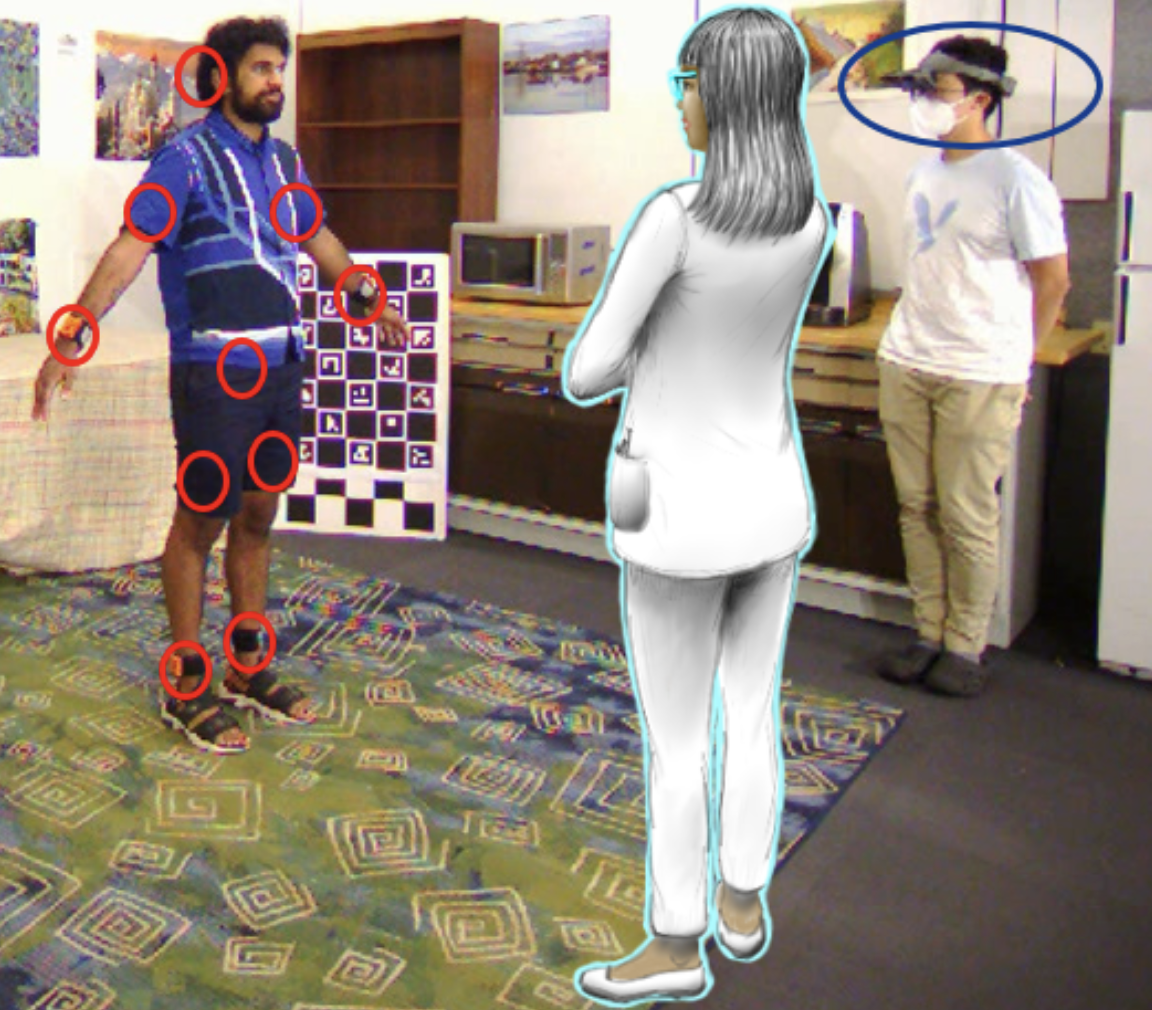

Reconstruction of Human Body Pose and Appearance Using Body-Worn IMUs and a Nearby Camera View for Collaborative Egocentric Telepresence

IEEE VR 2023 (ReDigiTS Workshop)

A collaborative 3D reconstruction method estimates a target person's body pose using worn IMUs and reconstructs their appearance via an external AR headset view from another nearby person. This approach aims to enable future anytime, anywhere telepresence through daily worn accessories.

Software

rPPG-Toolbox: Deep Remote PPG Toolbox

NeurIPS 2023

A comprehensive toolbox that contains unsupervised and supervised remote photoplethysmography (rPPG) models with support for public benchmark datasets, data augmentation, and systematic evaluation.